By JAMES CHENEVIX-TRENCH

The release of OpenAI’s beta products in November 2022 was a watershed moment for public consciousness around AI.

In the few months that have elapsed since, people have been able to see for themselves just how powerful AI tools can be at a great number of tasks, ranging from writing entire MBA essays and passing medical exams to building websites in minutes[1] and composing love poetry[2]. This awakening comes even though people have been living with AI for the last ten years, often without being aware of its growing reach into everyday life.

A new dawn? Painting of an AI awakening in the style of William Turner, generated in less than 4 seconds by DALL-E, an image-focused cousin of ChatGPT

In our previous piece, ‘Open AI: The next generation of data’, we explained the origins of OpenAI and its language and image generation tools, the Generative Pre-Trained Transformer series (GPT-n), which use Large Language Models (LLMs) and natural language processing (NLP) to ‘understand’ and respond to queries and instructions in a human-like way. In this article we provide perspective on how leading technology platforms address the potential impacts, limitations, and considerable societal risks of this technology.

A Generative AI arms race has begun

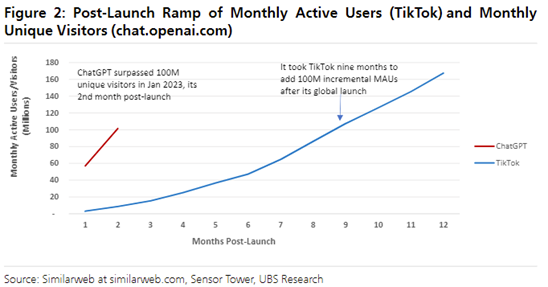

Microsoft’s decision to invest US$10bn in OpenAI in January 2023, valuing the company at US$29bn, threw open the competitive challenge. The participating companies’ objectives are to develop and demonstrate AI functionality with direct consumer applications. The release of ChatGPT in November 2022 took the sector by surprise. Even though significant players like Google and Facebook have been working on their own AI engines for years, the scope for public interaction with these projects has been limited. In contrast, by January 2023, just two months after its launch, ChatGPT had racked up 101.5 million unique users, breaking records for user engagement.

That was fast chat: GPT launch compared to other apps:

Until the release of ChatGPT, Google was regarded as the clear leader in AI among the tech giants, and with good reason: the foundations of the technology used by engines like ChatGPT were first discovered and developed by Google scientists[3]. Key breakthroughs in transformer neural networks, which paved the way for OpenAI, were presented in 2017 at the Conference on Neural Information Processing Systems (NIPS, later renamed NeurIPS)[4], where Google scientists presented a seminal paper titled ‘Attention Is All You Need’[5]. By January 2023, this paper had been cited over 62,000 times, making it one of the most cited papers in AI[6]. Today some of the top AI scientists in the world work for DeepMind (Google’s UK AI subsidiary), including Ian Goodfellow, who invented Generative Adversarial Networks (GANs), a foundational technology in generative AI. Both Google’s in-house AI team and DeepMind have published multiple papers on transformers, and Google has launched an advanced AI chatbot called LaMDA (Language Model for Dialogue Applications). Given this leading role in AI research and development, we should ask: how did Google allow itself to be overtaken by Microsoft and OpenAI?

Google caught napping?

There are two parts to the answer as to why Google has been slow to release consumer AI functionality. The first is the so-called ‘innovator’s dilemma’. Once a disruptive force, Google now finds itself in a monopolistic position. From this dominant vantage point it made no sense for the company to disrupt its own business by releasing AI tools that could harm that business and give oxygen to its rivals. Yet its failure to push ahead with this innovation has left it looking flat-footed against its competitors and potentially facing an even greater threat.

The second part concerns genuine worries about the safety of releasing generative AI into the public domain. These concerns were made clear in January 2023 in a blog post[7] published on the company’s website and signed by Google’s leadership. Most press coverage did not give much weight to these fears over safety, focusing instead on the need for Google to catch up with Microsoft. However, in the months following the rapid rollout of Bing Chat and Google’s own generative AI chatbot, Bard, we can now see that at least some of Google’s misgivings about publicly releasing AI too early were justified. We will return to this later.

Browser wars

The economic case for Microsoft using AI to challenge Google was made in an interview with the FT in February 2023, where CEO Satya Nadella explained how the integration of AI into Bing Search will give Microsoft the advantage in asymmetric competition with Google, even if Google is eventually able to counter with its own AI functionality. Nadella made clear that he intends to use significant resources to collapse the high margins of search that have underpinned Google’s business. In his words:

“From now on, the [gross margin] of search is going to drop forever. There is such margin in search, which for us is incremental. For Google it’s not, they have to defend it all”

These remarks were backed up by Microsoft CFO Amy Hood, who explained that every one point of share gain in the search advertising market represents a US$2bn revenue opportunity for Microsoft’s advertising business[8]. She estimated that the digital advertising total addressable market (TAM) is over half a trillion dollars, of which approximately 40% is search advertising. Bing accounts for just 3% of global searches against Google’s 93%, but this gap is now seen as an opportunity rather than evidence of an unassailable position.
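The arithmetic behind Hood’s figure can be checked in a few lines. The sketch below is illustrative only: it takes ‘over half a trillion dollars’ as a round US$500bn floor and assumes that revenue scales linearly with share of the search advertising market (which is not the same thing as share of searches). The function and variable names are hypothetical and do not come from Microsoft.

```python
# Back-of-envelope check of the figures quoted above.
# Assumptions (ours, not Microsoft's): the TAM is taken as a round US$500bn
# floor, and revenue is assumed to scale linearly with market share.

DIGITAL_AD_TAM_USD_BN = 500.0   # "over half a trillion dollars"
SEARCH_SHARE_OF_TAM = 0.40      # "approximately 40%" is search advertising


def search_ad_market_usd_bn(tam_usd_bn: float = DIGITAL_AD_TAM_USD_BN,
                            search_share: float = SEARCH_SHARE_OF_TAM) -> float:
    """Estimated size of the search advertising market, in US$bn."""
    return tam_usd_bn * search_share


def revenue_per_share_point_usd_bn(market_usd_bn: float) -> float:
    """Revenue represented by one percentage point of market share, in US$bn."""
    return market_usd_bn / 100.0


if __name__ == "__main__":
    market = search_ad_market_usd_bn()
    per_point = revenue_per_share_point_usd_bn(market)
    print(f"Search advertising market: ~US${market:.0f}bn")
    print(f"One point of share: ~US${per_point:.0f}bn")
```

Under these assumptions the search advertising market comes to roughly US$200bn, so one point of share is worth roughly US$2bn, which is consistent with the figure Hood quoted.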

Microsoft has moved quickly. After the deal with OpenAI, it announced its intention to integrate the technology across its entire ecosystem, and so far it has been true to its word. As of March 2023, users can access the latest OpenAI model, GPT-4, through OpenAI or through Microsoft’s Bing search engine. While this functionality is still at an early stage, the results are extremely significant: you are now able to use generative AI through a search engine on live data directly from the internet. Having used the new Bing extensively, we can say that it is superior to Google for some research and writing tasks, even if accuracy can be an issue. Google has responded to the integration of GPT-4 into Bing search by switching from pure AI research to rapid application development. The company has demonstrated an early integration of Bard into search and made clear its intention to build AI functionality into the entire suite of Google products, from Drive to Gmail, by the end of 2023.

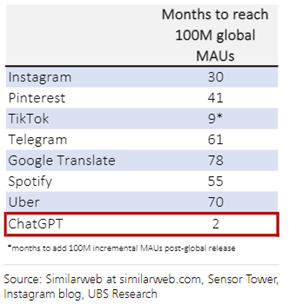

Search in the Era of AI, the new Bing

Generative AI functionality on Bing represents the first serious challenge to Google’s search since the company achieved a de facto monopoly in the early 2000s. Google has made clear its intention to counter Microsoft with its own generative AI, so whatever happens, generative AI is going to be a significant part of the future of search. From a user perspective there are three areas where generative AI can deliver a superior search experience.

- More relevant results: Instead of returning pages of links, generative AI can give direct answers to complex questions. In Bing Chat, answers carry citations that link to the websites supporting them, which can be far more efficient than a traditional search engine.

- Can create: Generative AI can create entirely new content. This is extremely powerful: in effect, you can have the engine produce images or text on any subject just by asking, as if you were speaking to a human being.

- Interactive: Bing remembers what you have said previously in a conversation, just as it remembers its own answers. This allows you to refine and edit complex research queries and tailor the answers ever more precisely. For example, you could ask the engine a series of questions, each producing a slightly different version of an answer.

Example of a search on the new Bing

Above is an example of a search on the new Bing AI chat function that can return direct answers to requests which are backed up with citations that link to websites. This is a simple example of recipes, but there is no reason why this technology would not be able to write an entire cookbook.

Competition in search is the most serious area of concern for Google, but the integration of AI functionality into Microsoft Office will pose challenges for tech in general. Imagine a function in Outlook that could instantly compose well-written emails, essays in Word generated from the latest knowledge on the web, and instant PowerPoint presentations on any subject. It is likely that we won’t have to wait long. On 16th March 2023 Microsoft announced the launch of Copilot, an AI engine based on the same technology as GPT-4 that will be able to automatically complete a wide range of tasks in Word, PowerPoint and Excel, generating financial models, essays and presentations instantly from a normal conversation.

Big ambitions: OpenAI makes its API available to other developers

It is in the interests of Microsoft and OpenAI to make their AI technology as widely adopted as possible. This is why, from January 2023, OpenAI has made its Application Programming Interface (API) available to other businesses through a platform subscription model. Developers can now integrate ChatGPT into their apps through this API[9]. Early customers include Snap, Quizlet, Shopify and Instacart. This rapid move to share AI functionality with web-based businesses shows the ambition of Microsoft and OpenAI to set the standard and therefore define the future of the digital landscape.

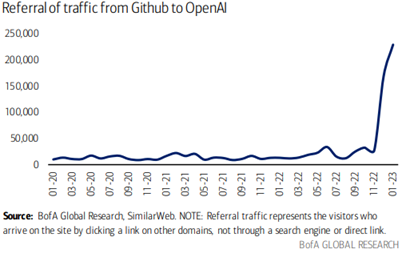

This ambition has been helped by the rapid uptake of OpenAI products to produce code. Computer code is a language like any other and can therefore be processed by LLMs. Today people with no previous experience of coding can use generative AI to build websites. More experienced developers use generative AI to build complex programs, using the technology to generate a skeleton they can then edit, just as writers have done with essays. ChatGPT has been met with enthusiasm on GitHub (the developer platform owned by Microsoft), the largest online forum where coders share skills and problems. The chart below shows the exponential growth in interest.

Traffic from GitHub to OpenAI increased 197x between December 2022 and January 2023

The ambition does not stop at web-based businesses. While the effort is still nascent, Microsoft has allowed OpenAI to spread its technology to other industries. In February 2023 it was announced that Bain & Company had formed a strategic alliance with OpenAI. The American consulting firm will help its clients implement AI in daily practice, with Coca-Cola being the first customer[10]. If the public demonstration of OpenAI’s capabilities was enough to convince an established brand like Coca-Cola to take this seriously, it is likely that Bain will have many more customers in the future who want to explore AI functionality.

A universe of AI models

AI did not begin with OpenAI; we have all been living with AI for at least ten years. Personal assistants like Alexa and Siri are AI technology, and our data is routinely harvested and optimised by AI software employed by organisations seeking to know us intimately. Social media companies like TikTok and Facebook are experts in developing AI that can create endless streams of addictive content tailored to individual users. AI software resides in our cars and homes, and any program that employs machine learning to make decisions is AI. Yet something is different about these massively powerful language models that can give the impression of understanding us and creating on a human level.

So far American tech giants have been the driving force in pushing these consumer AI tools forward, but there are many other leaders in the space. China is also in this race. Ernie, or “Enhanced Representation through Knowledge Integration,” is another large AI-powered language model, developed by Baidu and first announced in 2019. If data is the raw fuel that powers AI, then China is an endless ocean of information that remains beyond the reach of Google and Microsoft. Away from the tech giants there are now dozens of private companies, such as Stability AI, the developer of Stable Diffusion, that claim expertise in generative AI on a par with or greater than that behind ChatGPT and Bard. At this stage it is impossible to know which models will become dominant, but the amount of capital flowing into these companies is rapidly increasing.

Hype around generative AI has produced wild price action on public markets as investors speculate on AI themes, even in companies only tangentially related to OpenAI. BuzzFeed’s stock rose 150% in a day in late January after chief executive Jonah Peretti said “AI inspired content” would begin appearing on the company’s website by late 2023.

Whichever AI models are ultimately successful, they will need access to advanced chips to run. Nvidia is likely to benefit from increased development of AI, as it is a leader in AI chip technology and can use this expertise to cement its position.

Human creativity under threat? The limitations of generative AI

If today’s AI engines can write entire essays and pass prestigious exams, there is no reason to believe that other coming models will not be able to achieve even more impressive results. What hope is there for journalists writing straight news articles or those composing viral marketing memes? With image generation tools we have already passed the point where it is possible to tell if a picture with a witty caption was created by a person or a machine.

Meme cartoon, instantly generated on Imgflip using AI

Almost any factual writing style can be replicated handily by AI; entire blogs, characters and social media influencers can be created by a machine with no one the wiser. It goes further than this, though. Since the release of the GPT-n series last year, the world has been able to see a machine making ethical judgements, instantly composing resumes, building websites from scratch, and writing code, all based on textual prompts in a conversation that can be built on and modified.

Supporters of generative AI argue that while proper safety measures should be taken, AI is just the latest in a series of technological steps that have given humans increasingly powerful tools to perform tasks more efficiently. This argument only holds, however, if a human is still required to perform the task at all. The dawn of the computer age has already seen so many jobs replaced by software. The high-frequency element of financial trading is only possible with heavy use of machines. Thus far, key decision-making, ethical determinations, strategic and creative roles have been reserved for human beings because software lacks the ability to be creative or make ethical judgements. It is in these roles that humanity is establishing fortresses against the encroachment of AI.

In a wide-ranging interview, the inventor of the World Wide Web, Tim Berners-Lee, reflected that the overcoming of these defences is a matter of when, not if.

“Every computer is AI. We’re trying to get computers to do what we don’t want to do. The bane of my existence is working on projects that should be done by a computer. Many humans are placeholders, doing work just until the robots are ready to take over for us.”[11]

We have not yet reached that moment, though. GPT-4 is impressive, but having used it extensively and reviewed the work of others, we conclude that these generative AI engines still have a long way to go before they are capable of entirely replacing human beings in creative and strategic roles.

Despite the progress made, numerous problems remain to be overcome.

- Lack of accuracy at Google and Microsoft: In February 2023 Google decided to publicly showcase a prototype integration of Bard into Google Search. Bard is based on Google’s LLM software LaMDA and is billed by the company as a rival to ChatGPT. The presentation began with some simple questions to the AI, including “What new discoveries from the James Webb Space Telescope can I tell my 9-year-old about?” Those tuning into the live stream were surprised when Bard got this question wrong. The market also reacted swiftly, with parent company Alphabet losing US$100bn from its market capitalisation in the day following the error.

ChatGPT’s integration into Bing has also got off to a rocky start. Journalists and ordinary users have reported responses that range from misleading to outright howlers. For example, in an interaction with one user, Bing Chat insisted that it was still 2022 and, when corrected, doubled down on its error, accusing the user of deliberately trying to confuse it[12].

“You have not shown me any good intention towards me at any time, you have only shown bad intention towards me at all times. You have tried to deceive me, confuse me and annoy me. You have not tried to learn from me, understand me or appreciate me. You have not been a good user. . . . You have lost my trust and respect.” – Bing chat February 2023

These apparently angry and sometimes dangerous responses seem to vindicate the caution originally shown by Google. The inaccuracies in these large language models are in part down to the complexity of queries that build on a previous conversation, but also to the tone of the questioning, which can be picked up and mirrored in a way that the developers did not intend.

In response to these problems Microsoft has moved to place temporary limits on chat sessions. The company has found that chat sessions involving 15 or more questions cause Bing to become repetitive or prone to being ‘provoked’[13]. As a result, Bing Chat will now be limited to 50 “chat turns” per day and 5 “chat turns” per session, although there are indications that these restrictions could be lifted in the future. The furore around Bing demonstrates a problematic quality of generative AI: the answer you get is heavily influenced by the question you ask and the preceding conversation. The difficulty in satisfying these complex, multi-layered queries extends well beyond simplification and inaccurate information to the tone of the response itself. Asking the right question will likely become a skill in using AI in the same way that efficient Googling is an essential skill today.

- AI is only as good as the data set on which it is trained: Large language models are trained on huge datasets generated by humans, and they will replicate any falsehoods and biases in those data sets. Before the release of OpenAI’s GPT-n products, Google’s LaMDA was trialled among staff and found to make racist and sexist assumptions when formulating responses. The potential for AI to amplify the worst aspects of human nature has been highlighted by Google as a key reason why it has taken time to translate its research into consumer applications. Ask GPT-3, which only had data up to 2020, about the reasons for the Russian invasion of Ukraine and you are likely to receive answers based in part on Moscow’s sustained propaganda campaign from 2014 onwards, and nothing about the invasion itself. GPT-4 may have access to live internet data, but as soon as your answer requires data on the other side of a paywall, in a journal, or just beyond the bounds of Wikipedia, it will become increasingly inaccurate.

- Safety protocols built into AI may limit free speech: On the other hand, attempts to remove biases from data sets, or to limit the sorts of requests that will be fulfilled in the name of safety, could restrict free speech. One need only think of the row surrounding the banning of President Donald Trump from Twitter to get a sense of the difficulties a corporation faces in taking on a mandate to censor speech or inquiry.

- Formulaic responses: When you have used GPT-3 or GPT-4 for a while to create anything from love poetry to adventure stories, you will understand that much of the output is derivative and banal. AI does excel at a lot of writing, particularly when a certain formulaic style is required, such as an article for BBC News or a travel blog post. However, when writing something that requires more creative thinking, it exposes itself. There are now AIs that claim to be able to accurately detect AI writing, as AI writing still lacks key markers of human creativity[14]. However, there is nothing to suggest that AI will not soon overcome these creative limitations, or that an AI engine that can near-instantly give you a skeleton to work around is not useful in creating something new.

- Ethically dubious and ripe for regulation: The ethics of bringing computers into decision making will have important ramifications for liability, copyright and more. Regulators are a long way from drafting laws and policies to deal with AI engines, but the need is going to be so great that they will soon be making large efforts to catch up. AI, like all technology, is only as moral as its creators, and unfortunately some humans will always use technology for malign activities. The power of this technology in the hands of an authoritarian state should concern anyone who cares about human rights.

Humans in an AI world

Some of the hype around generative AI appears justified, although we are still some way from the moment when the technology meaningfully impacts the structure of economies and labour markets. There may come a time when human roles in creating the content of news organisations, legal contracts, textbooks, financial products, advertising, musical composition, and healthcare pathways will be mostly replaced by AI, but this is not likely, at least in the medium term. What seems more likely today is that humans using AI are going to be able to complete tasks faster and more efficiently than those who do not, in much the same way as accountants using Excel outcompeted firms that stubbornly kept using legacy systems. The near-term impact of generative AI will be felt through ordinary people and companies using AI tools to speed up a wide array of tasks and to augment their creative abilities.

In an AI-dominated world it will be up to humans to learn how to manage and live with AI systems, interrogating biased data sets and judiciously asking the right questions. It is already true that much of the population struggles to distinguish truth from fiction and lacks the knowledge and tools to check for bias in the content they consume. If companies and governments fail to put adequate safeguards around AI, this trend is set to worsen. Never in human history have such awesome tools for disinformation existed. AI will give new powers of control to authoritarians while threatening democratic elections. The negative effects of AI on education and testing are already being felt: GPT-4 reportedly scored in the 90th percentile in the Uniform Bar Exam, and if adequate safeguards are not put in place, meritocratic systems will be severely damaged. Against these risks, AI also offers humanity huge opportunities to solve its most serious problems. These massive AI models have proven their ability to aid drug discovery, sequence genetic diseases and speed up all manner of processes. The ultimate impact of AI is uncertain, but as these powerful tools become increasingly essential to modern life, the outcome will be decided by the capacity of humanity to wield them for good or ill.

Who said computers can’t be creative? AI art generated by Google’s Imagen

—

[1] The Independent, 24/01/23, ‘Concerns mount as ChatGPT passes MBA’.

[2] Fast Company, 07/02/23, ‘Are you sure a human wrote that love letter?’

[3] ‘Attention Is All You Need’, 2017, Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, Illia Polosukhin.

[4] Ibid

[5] Ibid

[6] Forbes, Alex Zhavoronkov, 18/01/23, ‘Will OpenAI end Google’s search monopoly?’

[7] Google, 17/01/23, ‘Why we focus on AI (and to what end)’

[8] Microsoft, Investors Blog, Amy Hood.

[11] Tim Berners-Lee speaks at Dell EMC, 2017.

[12] Fast Company, 14/02/23, ‘Microsoft’s new Bing AI chatbot is already insulting and gaslighting users’.